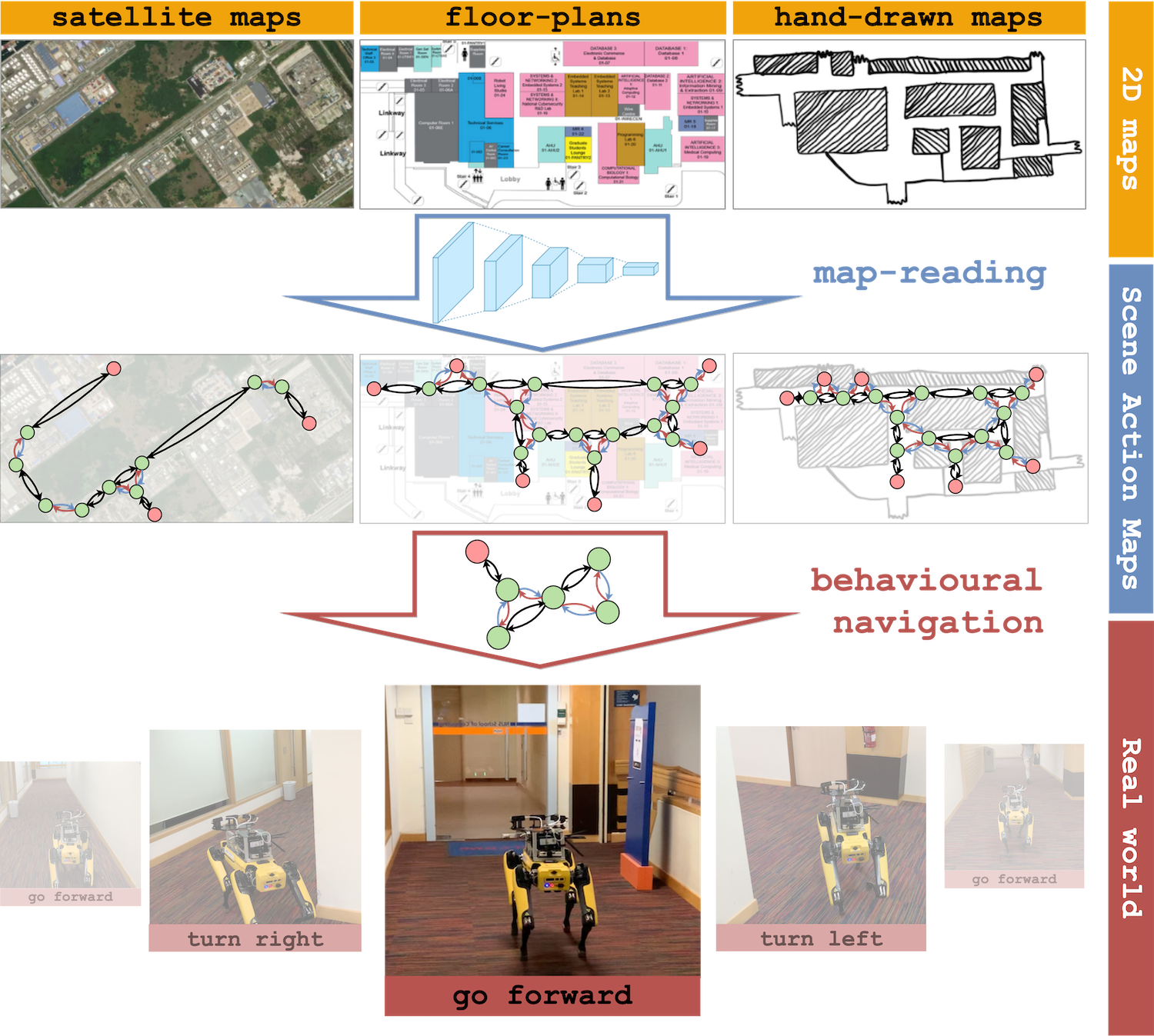

Humans are remarkable in their ability to navigate without metric information. We can read abstract 2D maps, such as floor-plans or hand-drawn sketches, and use them to plan and execute routes in rich 3D environments. We posit that this is enabled by the ability to represent the environment abstractly as interconnected navigational behaviours (e.g., "follow the corridor" or "turn right"), without relying on detailed, accurate spatial information at the metric level. We introduce the Scene Action Map (SAM), a behavioural topological graph, and propose a learnable map-reading method that parses a variety of 2D maps into SAMs. Map-reading extracts salient information about navigational behaviours from the overlooked wealth of pre-existing maps, which are abstract and inaccurate and range from floor-plans to sketches. We evaluate SAMs for navigation by building a behavioural navigation stack and deploying it on a quadrupedal robot.
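The abstract describes a SAM as a behavioural topological graph: places connected by navigational behaviours rather than by metric coordinates. The sketch below is a minimal, hypothetical illustration of that idea, not the authors' SAM representation or planner. The class name `BehaviourGraph`, the place labels, and the use of breadth-first search to find the route with the fewest behaviours are all assumptions made for illustration.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class BehaviourGraph:
    """Hypothetical behavioural topological graph (illustrative only).

    Nodes are place labels; directed edges are labelled with navigational
    behaviours. No coordinates or edge lengths are stored anywhere.
    """
    edges: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def add_edge(self, src: str, behaviour: str, dst: str) -> None:
        # Record that executing `behaviour` at `src` is expected to reach `dst`.
        self.edges.setdefault(src, []).append((behaviour, dst))
        self.edges.setdefault(dst, [])

    def plan(self, start: str, goal: str) -> list[str] | None:
        """Breadth-first search for the route with the fewest behaviours.

        Returns the behaviour sequence, or None if `goal` is unreachable.
        """
        parent: dict[str, tuple[str, str] | None] = {start: None}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if node == goal:
                # Walk back through the parent links to recover the behaviours.
                route = []
                while parent[node] is not None:
                    prev, behaviour = parent[node]
                    route.append(behaviour)
                    node = prev
                return route[::-1]
            for behaviour, nxt in self.edges.get(node, []):
                if nxt not in parent:
                    parent[nxt] = (node, behaviour)
                    queue.append(nxt)
        return None


# Usage with hypothetical place names:
sam = BehaviourGraph()
sam.add_edge("lobby", "follow the corridor", "junction")
sam.add_edge("junction", "turn right", "lab")
sam.add_edge("junction", "turn left", "kitchen")
print(sam.plan("lobby", "lab"))  # ['follow the corridor', 'turn right']
```

Because no edge carries a distance, a plan here is only an ordered list of behaviours. Executing each behaviour on a robot would need a separate behaviour controller, which this sketch does not model.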

@inproceedings{Loo-SAM-ICRA-2024,
  author    = {Joel Loo and David Hsu},
  title     = {{Scene Action Maps: Behavioural Maps for Navigation without Metric Information}},
  booktitle = {International Conference on Robotics and Automation (ICRA)},
  year      = {2024},
}